musefully

musefully.org

https://github.com/derekphilipau/musefully

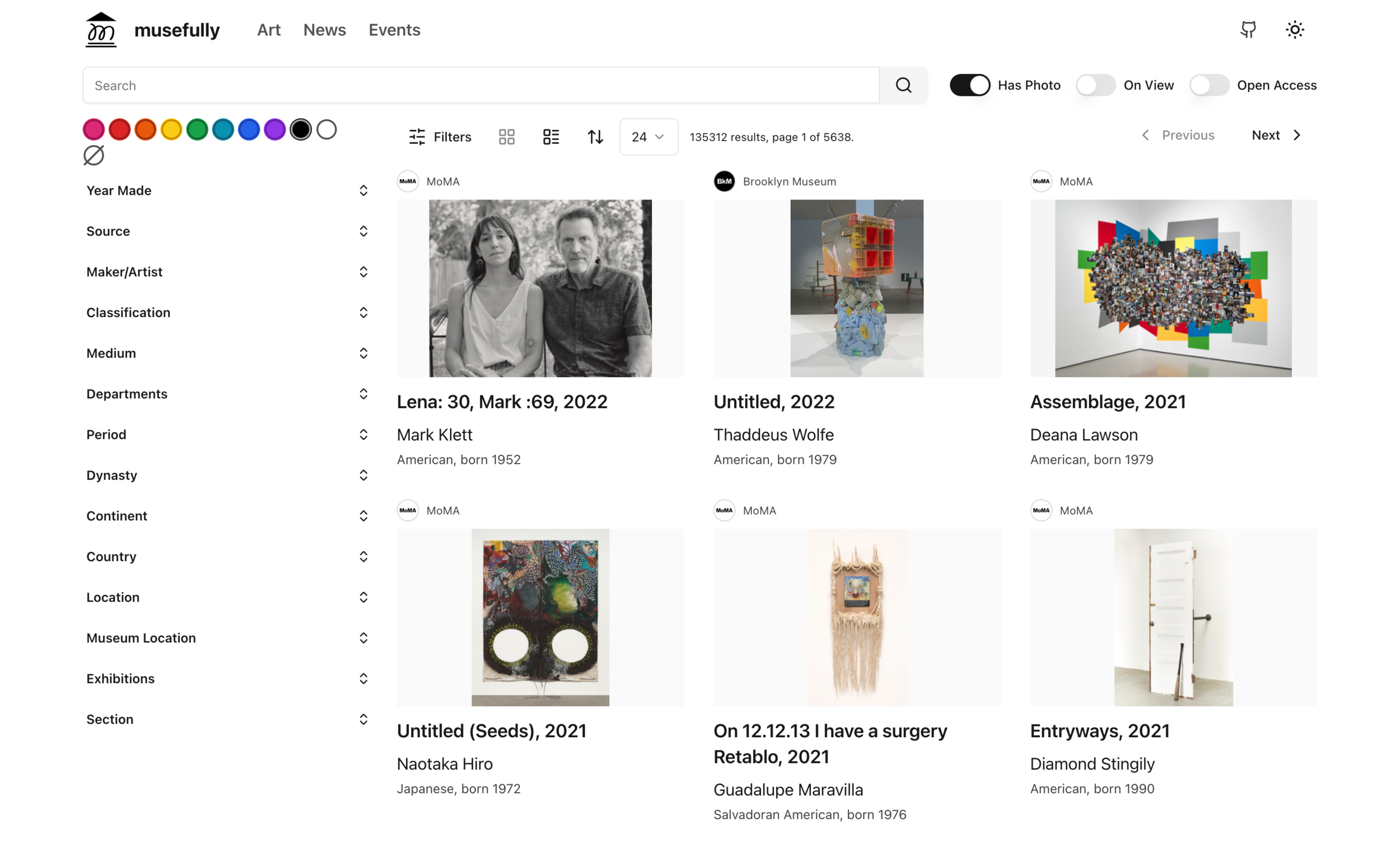

In an earlier project, museum-nextjs-search, I showed how one could use Elasticsearch & Next.js to build a performant, responsive and accessible faceted search for online collections. Musefully takes this idea one step further, allowing one to ingest datasets from multiple sources.

Screenshot of musefully.org Art section in light mode.

Goals

Adaptable solution for any museum to build an online collections search from JSON or CSV data.

General-purpose collections, news & events search website (musefully.org) that allows a variety of audiences to search across open access museum collections.

Serve as experimental testing ground for technologies like OpenAI CLIP embeddings, OpenAI GPT API integrations, data visualizations, and more.

Next.js template

Based on https://github.com/shadcn/next-template (Website, UI Components), which is an implementation of Radix UI with Tailwind and other helpful utilities.

Features

Full-text search, including accession number

API Endpoints for search & document retrieval

Searchable filters

Linked object properties

Custom similarity algorithm with combined weighted terms (can be adjusted)

Dominant color similarity using HSV color space.

Embedded JSON-LD (Schema.org VisualArtwork) for better SEO and sharing

Image Zoom with Openseadragon

Image carousel with embla-carousel

Form handling via Formspree

Meta & OG meta tags

next-themes dark/light modes

@next/font font loading
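As a rough illustration of the "combined weighted terms" similarity feature listed above, here is a minimal sketch of how such a query could be assembled for Elasticsearch. It is not the project's actual implementation: the field names (`artist`, `classification`, `medium`), the boost values, and the `buildSimilarQuery` helper are all assumptions made for the example. The idea is that each field shared with the source document contributes a boosted `term` clause, and the source document itself is excluded from the results.

```typescript
// Hypothetical document shape: only `id` is required, other fields are optional.
type SourceDoc = { id: string; [key: string]: unknown };

interface WeightedField {
  field: string; // hypothetical field name in the index
  boost: number; // relative weight, meant to be tuned
}

// Assumed weights for illustration only; adjust to taste.
const SIMILARITY_FIELDS: WeightedField[] = [
  { field: "artist", boost: 4 },
  { field: "classification", boost: 1.5 },
  { field: "medium", boost: 1 },
];

// Builds an Elasticsearch bool query where each non-empty string field
// of the source document becomes a boosted term clause.
function buildSimilarQuery(
  doc: SourceDoc,
  fields: WeightedField[] = SIMILARITY_FIELDS
) {
  const should = fields
    .filter(({ field }) => typeof doc[field] === "string" && doc[field] !== "")
    .map(({ field, boost }) => ({
      term: { [field]: { value: String(doc[field]), boost } },
    }));

  return {
    bool: {
      should,
      minimum_should_match: 1,
      // Do not return the document we are comparing against.
      must_not: [{ ids: { values: [doc.id] } }],
    },
  };
}
```

The returned object would be passed as the `query` of a search request. Adjusting the similarity then amounts to editing the weights table rather than the query logic.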

Experimental Features

Similarity based on OpenAI CLIP embeddings stored in Elasticsearch dense vectors worked well, but slowed down my mini server (you can learn more about it here). The old code is in the feature-experimental-clip branch. The code here was used to add the embeddings via a Colab notebook, but it's a hack.
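For readers curious what an embedding-based lookup might look like, the following is a hedged sketch of a search body using the `knn` option of the Elasticsearch 8 search API. It is not taken from the experimental branch, which may have used a different approach. The field name `imageEmbedding`, the default `k`, and the `buildClipKnnSearch` helper are assumptions for illustration, and the index is assumed to map that field as an indexed `dense_vector`.

```typescript
// Shape of the search body produced below.
interface KnnSearchBody {
  knn: {
    field: string;
    query_vector: number[];
    k: number;
    num_candidates: number;
  };
  _source: { excludes: string[] };
}

// Builds a kNN search body from the embedding of the artwork being viewed.
// `field` is a hypothetical dense_vector field name.
function buildClipKnnSearch(
  embedding: number[],
  k = 12,
  field = "imageEmbedding"
): KnnSearchBody {
  if (embedding.length === 0) {
    throw new Error("embedding must not be empty");
  }
  return {
    knn: {
      field,
      query_vector: embedding,
      k,
      // More candidates per shard improves recall at the cost of speed.
      num_candidates: Math.max(k * 10, 100),
    },
    // Leave the large vector out of the returned documents.
    _source: { excludes: [field] },
  };
}
```

Lowering `num_candidates` is one knob that trades recall for speed, which may matter on a small server.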